Contrary to a lot of information online, especially from AI search responses, you CAN download more than 25,000 rows of data from the Google Search Console (GSC) API…a lot more.

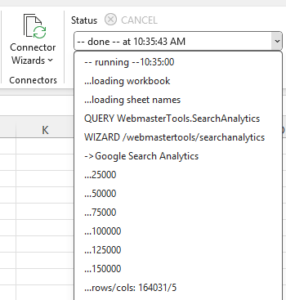

Analytics Edge makes multiple queries automatically to get all your data

At Analytics Edge, I work with a lot of APIs, and many of them are ‘paginated’, meaning you can download a ‘page’ of data, then another ‘page’, and another. With the GSC API, the maximum page size is 25,000 rows (the default is 1,000). Getting more data is a simple loop while incrementing the ‘startRow’ parameter by the value of the ‘rowLimit’ parameter. Join all the results together into a single table, and you can get all the data that is available.
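
Here is a minimal Python sketch of that loop, not a definitive implementation. `fetch_all_rows` and `query_page` are names I made up for illustration: `query_page` stands for whatever function you write to send one request with a given `startRow` and `rowLimit` and return the list of rows from the response. The sketch stops when a page comes back empty.

```python
from typing import Callable, Dict, List

MAX_ROW_LIMIT = 25_000  # largest page size the API accepts


def fetch_all_rows(
    query_page: Callable[[int, int], List[Dict]],
    row_limit: int = MAX_ROW_LIMIT,
) -> List[Dict]:
    """Collect every page by advancing startRow by rowLimit.

    query_page(start_row, row_limit) is a hypothetical callable that
    performs one API request and returns its rows (an empty list when
    there is nothing left).
    """
    all_rows: List[Dict] = []
    start_row = 0
    while True:
        page = query_page(start_row, row_limit)
        if not page:  # an empty page means we have read everything
            break
        all_rows.extend(page)  # join the pages into a single table
        start_row += row_limit
    return all_rows


# With google-api-python-client, query_page might look like this
# (assumes you already built an authorized `service` and know `site`):
#
# def query_page(start_row, row_limit):
#     body = {"startDate": "2024-01-01", "endDate": "2024-01-31",
#             "dimensions": ["page", "query"],
#             "rowLimit": row_limit, "startRow": start_row}
#     response = service.searchanalytics().query(siteUrl=site, body=body).execute()
#     return response.get("rows", [])
```

Passing the request function in as a parameter keeps the loop itself easy to test without touching the network.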

With the proliferation of Python wrappers for building API queries, you might be tempted to “vibe code” a quick solution, but there are some aspects to working with the API that the AIs don’t seem to be aware of because they aren’t well documented anywhere.

Analytics Edge makes a variety of no code products that let you work with the GSC API without knowing anything about the API. They are all desktop tools (Mac and PC) designed to make it easy to get your data. Exporter GSC is free.

Get more than 50,000 rows from the GSC API

Another often-confused limit in the documentation is the “maximum of 50,000 rows of data per day per search type”. That does not mean you can only download 50,000 rows. It means that if you build a query for 30 days of data, the limit is 50,000 rows for each of the 30 days: potentially 50,000 * 30 = 1,500,000 rows. (Note: the API will only return 1,000,000 rows per query, but you can split your query by date or other dimension to possibly get more.)
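
If you do split a query by date, something like the following sketch can generate the date windows. `date_windows` is a hypothetical helper of my own, and the 7-day default is only an example; each window would become the `startDate` and `endDate` of its own paginated query.

```python
from datetime import date, timedelta
from typing import Iterator, Tuple

ROWS_PER_DAY_LIMIT = 50_000  # per day, per search type


def date_windows(start: date, end: date, days: int = 7) -> Iterator[Tuple[date, date]]:
    """Yield inclusive (window_start, window_end) pairs covering start..end."""
    if days < 1:
        raise ValueError("days must be at least 1")
    current = start
    while current <= end:
        # The last window is clipped so it never runs past `end`.
        window_end = min(current + timedelta(days=days - 1), end)
        yield current, window_end
        current = window_end + timedelta(days=1)


# The per-day limit applied to a 30-day range:
potential_rows = ROWS_PER_DAY_LIMIT * 30  # 1,500,000

for window_start, window_end in date_windows(date(2024, 1, 1), date(2024, 1, 30)):
    print(window_start.isoformat(), window_end.isoformat())
```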

There are implications for this per-day limit, though, especially if you include multiple dimensions (columns) in your query. Let’s take an example of a website with 10,000 pages, each getting maybe an average of 5 different queries in a single day, and poof – you hit the limit with Page and Query in your API call. Add more fields and the situation gets worse…

More detail = less data

Google Search Console query data is filtered for privacy (“anonymized”), so as you ask for more detail, the filtering kicks in and you get partial data. They also show only the top results (“Due to internal limitations, Search Console stores top data rows and not all data rows“). If you absolutely want more of the gory detail, then you need to step up to using the BigQuery export.

The privacy filtering can have a big impact as the number of impressions drops, meaning a lot of long-tail queries will disappear as you try to refine a query by adding device or country. Various studies have shown that the impact can be significant and is very website and topic-specific, but everyone will see the degradation as you ask for more detail.

Analytics Edge lets you query by week or month. Bigger queries = more data = less privacy filtering.

Sorted by (only) clicks, descending

The API does not have a ‘sort’ option — the data is always delivered sorted by clicks, descending. That much is well documented, but there are a couple of gotchas:

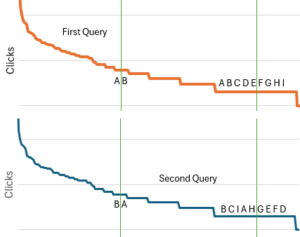

There is no secondary sort, and the order of multiple rows with the same number of clicks may be random. This means that if you make the same query twice, the order of some rows may change…and it does!

The sort is specific to the individual query, which means there does not appear to be any caching between API calls. When you ask for more data, it makes a new query of the data and delivers the results starting at the ‘startRow’ you specified.

A reminder: the number of clicks flattens out as it drops (a long tail curve), so there is more likelihood that there will be multiple rows at the end of the ‘page’ with the same number of clicks. As you pull more data, the next ‘page’ may start with the same number of clicks the previous one ended with.

Because there is no secondary sort, the second query (to get the data for the second ‘page’) could be in a different order, and the second page may include some of the same rows delivered in the first query — duplicate records!

The second page may also skip rows that never appeared on the first page. In the image, for example, the first query returns A on the first page, and the second query returns A again on the second page: A is duplicated; B is missing.

So…pull more data, expect more duplicates and missing data. The missing data is unfortunate, but the duplicates can mess up subsequent processing, so best to remove them.
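
Removing the duplicates can be sketched in a few lines of Python. This assumes each row is a dictionary shaped like the API response, with the dimension values in a `keys` list; `deduplicate_rows` is a name I chose for illustration. It keeps the first copy of each row and cannot bring back the rows that went missing.

```python
from typing import Dict, Iterable, List


def deduplicate_rows(rows: Iterable[Dict]) -> List[Dict]:
    """Keep the first occurrence of each combination of dimension values."""
    seen = set()
    unique: List[Dict] = []
    for row in rows:
        key = tuple(row["keys"])  # e.g. ("/some-page", "some query")
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


pages_joined = [
    {"keys": ["/a", "q1"], "clicks": 3},
    {"keys": ["/b", "q2"], "clicks": 1},
    {"keys": ["/a", "q1"], "clicks": 3},  # same row delivered again on the next page
]
print(len(deduplicate_rows(pages_joined)))
```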

Analytics Edge products deduplicate the data and offer post-query sorting options so you can sort by any column combination.

A word about quotas

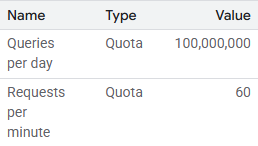

If you actually work with APIs, then you tend to read the documentation, especially the parts about quotas. I got concerned when I read a documented recommendation to run “a query each day for one day’s worth of data[…]. Running a daily query for one day of data should not exceed your daily quota”. Given what I covered above, that means 2 queries, each pulling 25,000 rows… should not exceed your quota?…which is funny since the daily quota for a project is 100 million queries; that is a little bigger than 2. Seems some of the AIs didn’t get the joke and tend to reinforce the written word with weird quota cautions.

Reality: do not worry about the daily quota unless you really abuse the system.

And if you space your queries a whole 1 second apart, don’t worry about the per-minute quota either.
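
One simple way to keep queries a second apart is a small throttle that you call before every request. This is only a sketch: the `Throttle` class is hypothetical, and the `clock` and `sleep` parameters exist just so it can be tested without actually waiting.

```python
import time
from typing import Callable, Optional


class Throttle:
    """Block until at least min_interval seconds have passed since the last call."""

    def __init__(
        self,
        min_interval: float = 1.0,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None  # time of the previous call

    def wait(self) -> None:
        now = self._clock()
        if self._last is not None:
            remaining = self._min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


# Usage: create one Throttle and call wait() right before each API request.
throttle = Throttle(min_interval=1.0)
throttle.wait()  # the first call returns immediately
```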

Analytics Edge uses regular Google logins (via OAuth) and manages the quota so you don’t have to worry about any of that.

Long tail analysis caution

Since long tail queries tend to have fewer clicks, they fall at the bottom of the clicks-descending sort. That is the region of higher privacy filtering and potential missing data (depending on the queries). Depending on your analysis, you may end up including some low-click data; just realize that data is likely incomplete. What you discover might be worth looking into, but realize the results could be a bit different the next time the analysis is run.

Bottom line

You can get a lot more than 1,000 rows from the Google Search Console API.

You can get a lot more than 25,000 rows from the Google Search Console API.

You can get a lot more than 50,000 rows from the Google Search Console API.

If you want to ‘vibe code’ a solution, make sure to

- do paginated queries to get all the pages of data

- join the results

- deduplicate the rows

- space your queries at least 1 second apart

- realize you can get a working solution from Analytics Edge